Sudoba Mirzozoda

Shabnam Zubaidullozoda

Omina Kabirova

Artificial Intelligence (AI) is vital in today’s society because it is used in almost every area, including healthcare, education, IT security, and caregiving. To understand AI’s role in today’s world, it is necessary to review its history and development, the work of figures such as Alan Turing, and the broader effects of AI on people.

What is AI?

AI is the reproduction of essential human mental abilities, such as reasoning and perception, in machines designed to act like human beings. These systems can perform tasks such as interpreting language, recognizing patterns, and solving problems, as well as creative work such as producing art. This raises the key question that has fascinated scholars for centuries: can computers really think the way humans do?

Can Machines Think?

Whoever first posed the question, the modern conversation about Artificial Intelligence begins with Alan Turing, who defined and advanced the field. Interest in ‘nonhuman intelligence’ goes back to the Greek philosophers, and the earliest seeds of the kind of artificial intelligence we see today were sown during the Renaissance, but the field has truly ‘come alive’ only over the last few decades thanks to advances in computational power. Artificial intelligence is on the verge of becoming an essential part of daily life, in everything from automobiles to communication.

History of AI

The roots of artificial intelligence can be traced back to the mid-20th century, with Alan Turing among the first researchers to explore the idea. Although the term “Artificial Intelligence” was not coined until the mid-1950s, the concept of building intelligent systems has fascinated humanity for thousands of years.

The idea of “artificial intelligence” goes back to ancient philosophers who contemplated questions of life and consciousness. In ancient times, inventors created “automatons,” which were mechanical devices designed to move independently of human intervention. The word “automaton” comes from ancient Greek and means “acting of one’s own will.” One of the earliest records of an automaton dates back to 400 BCE and describes a mechanical pigeon created by a friend of the philosopher Plato. Many years later, one of the most famous automatons was built by Leonardo da Vinci around the year 1495.

While the idea of a machine functioning on its own is ancient, this article will focus on the 20th century, when engineers and scientists began making strides toward developing modern-day AI.

The field of AI was officially defined in the 1950s, and since then, it has experienced many ups and downs. In recent decades, AI has made significant progress, but it has also fallen short of fulfilling many of its early promises—at least for now.

Throughout history, scientists and inventors have attempted to build machines that mimic human capabilities, aiming to create self-operating devices.

Early Attempts and Developments in Autonomous Machines and Computing

In 1206, during the height of the Islamic Golden Age, Ismail Al-Jazari, whom some call the ‘Father of Robotics’, built a musical device, essentially an early robot, and authored The Book of Knowledge of Ingenious Mechanical Devices. This book detailed dozens of mechanical inventions and provided instructions on how to construct them.

By the 17th century, various machines were being developed to automate complex calculations, helping to solve large and difficult problems faster and more reliably, freeing humans from performing tedious computations manually.

In 1770, The Turk was presented as an autonomous chess-playing machine capable of defeating any human opponent. However, the machine was a hoax; a skilled human chess player hidden inside operated it, making all the moves. Despite the deception, the illusion was successful because it built on the idea that a machine might someday be able to play and win logical games against humans.

In 1837, Charles Babbage designed the Analytical Engine, the first design for a general-purpose computer, although it was never completed. It contained many conceptual elements still seen in modern computers, such as a program, data, and arithmetic operations, all recorded on punch cards.

Later, Ada Lovelace developed the first algorithm for this hypothetical machine, creating what is considered the first computer software.

Alan Turing and the Birth of Artificial Intelligence

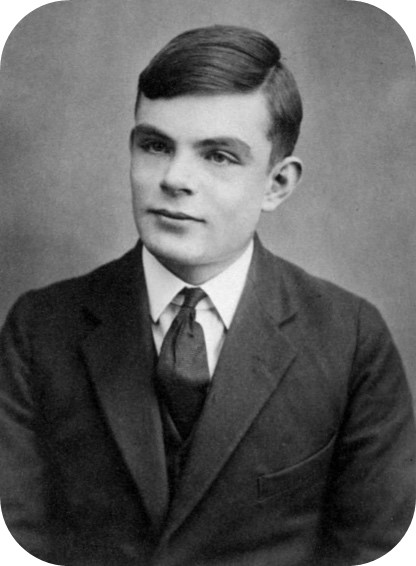

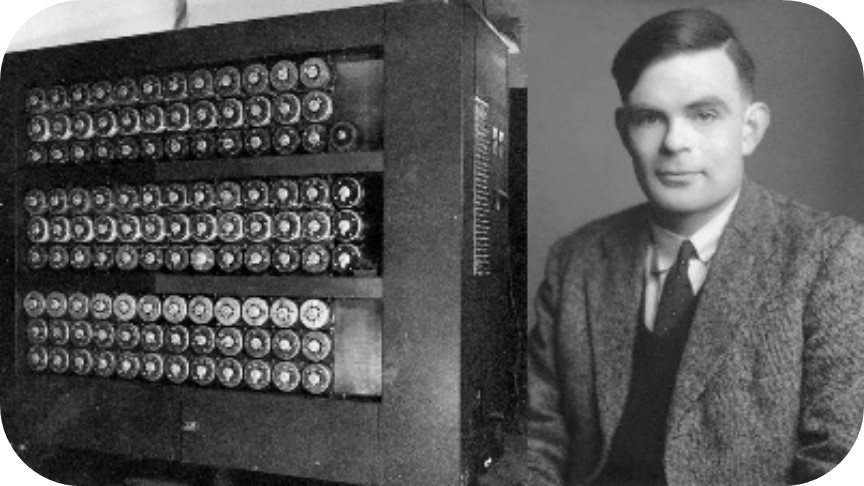

At a time when computing capacity was still largely reliant on human brains, the great British mathematician Alan Turing, often considered the father of computer science and artificial intelligence, laid the groundwork for these fields long before technology could fully support his ideas.

During the mid-20th century, computers were in their infancy, far from the capabilities needed to explore his theories in depth. Despite these limitations, Turing is credited with the first serious conceptualization of machine intelligence, which eventually evolved into what we now know as artificial intelligence.

One of his most significant contributions was developing a method to evaluate whether a machine could exhibit human-like thought. This method, initially called “the imitation game,” is now widely known as the Turing Test.

In this test, a human interacts with both a machine and another human through written communication, without knowing which is which. If the machine’s responses are convincing enough that the human judge cannot reliably differentiate between the machine and the human, the machine is said to have demonstrated a level of intelligence akin to human thought. The Turing Test remains a foundational concept in AI to this day.

The Dartmouth Conference and the Birth of Artificial Intelligence (1956)

In the summer of 1956, Dartmouth mathematics professor John McCarthy invited a small group of researchers from various disciplines to participate in a summer-long workshop focused on investigating the possibility of “thinking machines.” This group believed that “every aspect of learning or any other feature of intelligence can, in principle, be so precisely described that a machine can be made to simulate it.” The conversations and work they undertook that summer are largely credited with founding the field of artificial intelligence.

For the Dartmouth Conference, held two years after Turing’s death, McCarthy coined the term that would come to define the study of human-like machines. In the 1955 proposal outlining the purpose of the workshop, he used the term “artificial intelligence”, which is still in use today.

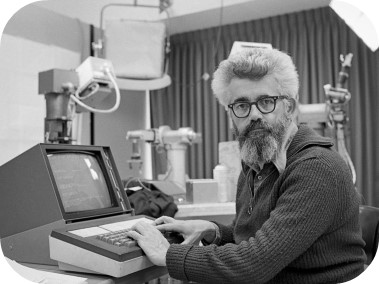

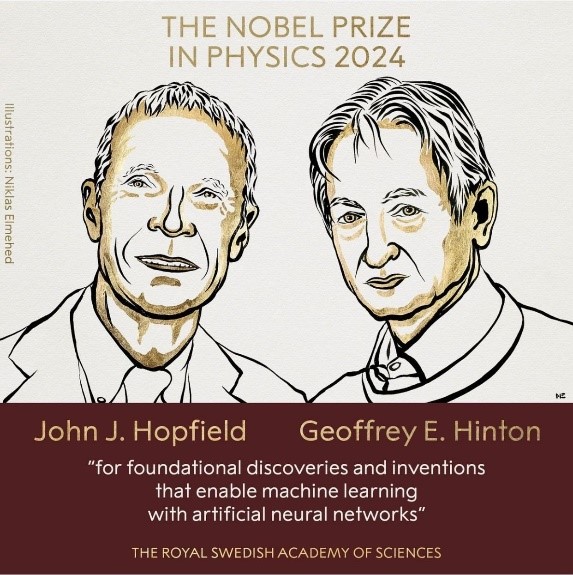

The very first chatbot, ELIZA, was developed in 1966 by MIT computer scientist Joseph Weizenbaum. Using simple pattern matching, ELIZA was programmed to mimic a therapist by practicing the style of dialogue used in Rogerian psychotherapy, reflecting the user’s statements back as questions. Decades later, Geoffrey Hinton, one of the leaders in the development of neural networks, made a great contribution to artificial intelligence that culminated in the 2024 Nobel Prize in Physics. ELIZA and Hinton are two reference points that mark the broad path of AI development, from simple models that imitate conversation to foundational technologies that have changed many people’s views on neural networks and the possibilities of artificial intelligence.
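ELIZA-style programs are usually described as matching keywords in the user's input and turning the matched fragment into a question. The sketch below illustrates that idea only; the rules, the `REFLECTIONS` table, and the `respond` function are hypothetical and are not taken from Weizenbaum's original script.

```python
import re

# Hypothetical word swaps so the reply addresses the user ("my" -> "your").
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are"}

# Hypothetical keyword rules: a pattern and a reply template.
RULES = [
    (re.compile(r"\bi feel (.*)", re.I), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.I), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.I), "Tell me more about your {0}."),
]


def reflect(fragment):
    """Swap first-person words for second-person ones."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(text):
    """Return a reply from the first matching rule, or a neutral prompt."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


print(respond("I feel sad about my job."))
```

The program has no understanding of the sentence; it only rearranges the user's own words, which is why such a small rule set can still feel conversational.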

Geoffrey Hinton: Advancing Neural Networks and Nobel Recognition

Many experts recognize Geoffrey Hinton as one of the pioneers of deep learning and refer to him as the ‘Godfather of Deep Learning’, principally because of his extensive work on neural networks. In the 1980s he carried out influential research on backpropagation, a method for training multi-layer neural networks that was popularized by David Rumelhart, Hinton, and Ronald J. Williams in a 1986 paper. Hinton also shared in a breakthrough in computer vision: with his students Alex Krizhevsky and Ilya Sutskever, he trained a deep convolutional neural network that ranked first in the 2012 ImageNet competition. This success transformed image recognition and laid the foundation for the widespread use of deep learning in other AI applications. For these efforts, Hinton received the 2018 Turing Award together with Yann LeCun and Yoshua Bengio for their work on deep learning.

In October 2024, Hinton and John Hopfield were awarded the Nobel Prize in Physics for foundational discoveries and inventions that enable machine learning with artificial neural networks. This recognition underscores their pivotal role in advancing AI and shaping its future.
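Backpropagation computes how much each weight in a multi-layer network contributed to the error by applying the chain rule from the output back to the input. The following is a minimal sketch on a tiny network with two inputs, two tanh hidden units, and one output; the network size, starting weights, learning rate, and function names are all assumptions chosen for illustration.

```python
import math


def forward(params, x):
    """Forward pass: hidden activations h and scalar output y."""
    W1, b1, w2, b2 = params
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    y = sum(w * hi for w, hi in zip(w2, h)) + b2
    return h, y


def loss(params, x, target):
    """Squared error between the network output and the target."""
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backprop(params, x, target):
    """Gradients of the loss for every parameter, via the chain rule."""
    W1, b1, w2, b2 = params
    h, y = forward(params, x)
    dy = y - target                            # dL/dy
    grad_w2 = [dy * hi for hi in h]            # output-layer weights
    grad_b2 = dy
    # Push the error back through tanh: d tanh(z)/dz = 1 - tanh(z)^2.
    dz = [dy * w * (1 - hi ** 2) for w, hi in zip(w2, h)]
    grad_W1 = [[d * xi for xi in x] for d in dz]
    grad_b1 = dz
    return grad_W1, grad_b1, grad_w2, grad_b2


def sgd_step(params, grads, lr=0.05):
    """One gradient-descent update on all parameters."""
    W1, b1, w2, b2 = params
    gW1, gb1, gw2, gb2 = grads
    return (
        [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)],
        [b - lr * g for b, g in zip(b1, gb1)],
        [w - lr * g for w, g in zip(w2, gw2)],
        b2 - lr * gb2,
    )


# Arbitrary starting weights and a single training example (assumed values).
params = ([[0.5, -0.3], [0.2, 0.8]], [0.0, 0.1], [0.7, -0.4], 0.05)
x, target = [1.0, 2.0], 1.0

before = loss(params, x, target)
for _ in range(100):
    params = sgd_step(params, backprop(params, x, target))
after = loss(params, x, target)
print(before, after)
```

Real deep learning systems do the same thing with millions of weights and many training examples, typically using automatic differentiation rather than hand-written gradients.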

Milestones in AI Development

Alexa, Amazon’s AI-based voice assistant, was unveiled in November 2014 together with the company’s smart speaker, the Echo. Alexa used natural language processing (NLP) and machine learning to enable spoken interaction, letting users search for information, control other smart devices, listen to music, and carry out more complicated tasks with a simple voice command. Equipped with a noise-canceling microphone array, the Echo could pick up commands in loud environments, which expanded the possibilities of voice-controlled technology in homes. Like the conversational computers that Star Trek popularized, Alexa has improved as people use it, making its responses more relevant and making artificial intelligence a ubiquitous part of daily life. Over time, Alexa became a pillar of smart home technology and brought practical, convenient AI interfaces to millions of users.

OpenAI and the Rise of Advanced AI Models

OpenAI was established in December 2015 by Sam Altman, Elon Musk, Ilya Sutskever, Greg Brockman, and others, with the fundamental aim of building artificial general intelligence (AGI) that would benefit all of humankind. OpenAI’s stated purpose is to build AI systems capable of outperforming humans at most economically valuable work while remaining safe and reliable. The organization began as a non-profit, but in 2019 it created a for-profit subsidiary, OpenAI LP, to raise funds for large-scale AI development. This change helped the company gain access to the massive computational resources needed to pursue AGI, including through a partnership with Microsoft, whose Azure platform provides cloud computing.

Its projects span multiple fields of research, including reinforcement learning, robotics, and AI safety, all aimed at advancing artificial intelligence while making sure it does not harm society. In 2016, OpenAI released OpenAI Gym, a suite of reinforcement learning environments in which others could test their algorithms. This work is critical for creating systems that need to learn the way we do, by making errors.
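Learning by making errors can be illustrated with tabular Q-learning, a classic reinforcement learning algorithm. The sketch below does not use OpenAI Gym; the corridor environment, the `step` function, and all parameter values are hypothetical stand-ins so the example runs on the standard library alone.

```python
import random

N_STATES = 5        # corridor positions 0..4; position 4 is the goal
ACTIONS = (0, 1)    # 0 = step left, 1 = step right


def step(state, action):
    """Hypothetical toy environment: returns (next_state, reward, done)."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by trial and error with epsilon-greedy Q-learning."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the length of each episode
            # Act randomly sometimes, or when there is no preference yet.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, action)
            # Move the estimate toward reward plus discounted best future value.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


q_table = train()
# Greedy action in each non-goal state (1 means "step right").
policy = [0 if left > right else 1 for left, right in q_table[:-1]]
print(policy)
```

The agent starts with no knowledge, wanders at random, and gradually prefers stepping right because only that direction ever leads to a reward.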

Safety and alignment are also prioritized at the company level, meaning that its models should behave in understandable ways and be used responsibly. Its creations include the GPT models behind systems like ChatGPT, and DALL-E, which generates images from text. These technologies have advanced the application of AI in fields such as healthcare and education, alongside open research projects that support collaboration among AI researchers.

This approach of balancing innovation with safety has placed OpenAI among the leading AI companies in the world, with a clear and strong emphasis on using AGI for the benefit of all communities.

The Role of ChatGPT in AI

A pivotal innovation from OpenAI is its family of Generative Pre-trained Transformer (GPT) models, from GPT-3, released in 2020, to the GPT-3.5 and GPT-4 models that power ChatGPT. Launched in November 2022, ChatGPT has revolutionized natural language processing by allowing AI to understand and generate human-like text. It can perform a wide range of tasks, including answering questions, composing essays, solving mathematical problems, and even writing code.

With the release of GPT-4 in March 2023, ChatGPT gained enhanced capabilities, including better contextual understanding, improved reasoning, and the ability to handle more complex language tasks. This marked a new era in AI’s interaction with humans, as it became a tool for industries ranging from education to customer service.

Nvidia’s Role in AI Development

One of the most critical factors in AI’s development has been the sophisticated hardware that supplies the computing power machine learning requires. Nvidia has advanced GPU technology and has supported the development of AI. Originally designed for graphics processing, GPUs have proven very useful for training deep learning models because they handle large-scale parallel processing efficiently.

Nvidia’s GPUs have now become the heart of modern AI, driving the development of neural networks and machine learning. The company’s CUDA software platform and its DGX systems are used in research and industry around the globe to train complex AI models efficiently.

Understanding Images in AI

Understanding images is a core part of AI, underpinning applications such as facial recognition, self-driving cars, and medical diagnostics. One type of neural network that performs exceptionally well at image analysis is the Convolutional Neural Network, or CNN for short. This has profound implications for fields such as medical image analysis and self-driving technology.
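The core operation in a CNN is sliding a small grid of weights, called a kernel, across the image and summing the products at each position. The sketch below shows that operation in plain Python on a tiny made-up image; the `conv2d` helper, the image, and the hand-picked edge kernel are illustrative assumptions, whereas a real CNN learns its kernels from data.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 3x4 image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds where brightness rises from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The non-zero column in the output marks where the dark and bright regions meet, which is the kind of local feature early CNN layers tend to pick up.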

Benefits of AI Across Sectors

- Healthcare:

AI helps diagnose diseases, create personalized treatment plans, and predict outcomes. AI systems like IBM Watson can analyze medical images to detect diseases that may be missed by human physicians.

- Education:

AI enables personalized learning experiences by adapting to students’ learning styles. Intelligent tutoring systems offer real-time feedback, improving educational outcomes.

- Cybersecurity:

AI enhances cybersecurity through proactive threat detection. Machine learning models can identify suspicious patterns in network traffic, preventing potential cyberattacks.

- Personal Assistants:

AI-driven assistants like Alexa and Siri streamline daily tasks using NLP, scheduling meetings, setting reminders, and responding to complex queries.

- Social Companionship:

AI-powered companions are helping alleviate loneliness and social isolation, especially among seniors. These AI systems offer conversation and emotional support, acting as social companions in homes and care facilities.

AI as a Conversation Starter: How Should Society Approach AI?

As AI continues to evolve, society must thoughtfully navigate its implications:

- Ethical Guidelines:

Establishing ethical guidelines for AI development is essential to ensure that AI achieves sustainable technological progress in a moral and just manner. These guidelines should promote responsible innovation and prevent misuse.

- Education and Training:

Employers must invest in education and training programs to prepare the workforce for an AI-driven economy. These programs should focus on developing skills that complement and enhance AI capabilities, ensuring workers can adapt to new technological demands.

- Public Awareness:

Raising public awareness about AI’s capabilities and limitations is crucial for managing expectations. Accurate information helps prevent the formation of unrealistic or exaggerated views about what AI can and cannot do.

- Collaborative Approach:

A collaborative effort among governments, industries, and academic institutions is necessary to advance responsible and effective AI innovations. Synchronizing these entities can maximize social benefits and create a unified strategy for AI development.

AI has the potential to significantly improve various aspects of human life. Therefore, it is vital to harness this potential thoughtfully and ethically, ensuring that AI serves humanity positively. With a proper and ethical approach, the full potential of AI can be realized to advance society.
