Sudoba Mirzozoda

Natural Language Processing: The Bridge Between Humans and Machines

Have you ever talked to Siri or used Google Translate? If so, you’ve already experienced Natural Language Processing (NLP)! NLP helps machines understand human language, making it possible for us to communicate with technology in ways we never thought possible. From chatbots that answer questions to tools like Grammarly that improve your writing, NLP is transforming how we interact with technology.

NLP is a branch of Artificial Intelligence (AI) and linguistics that enables computers to process, analyze, and produce human language, so that machines can understand and exchange messages in natural language.

Initially based on simple rules, it now relies on advanced machine learning (ML), making it one of the fastest-growing scientific fields. NLP is applied in tools such as virtual assistants, sentiment analysis, and machine translation.

As noted by Daniel Jurafsky and James H. Martin in Speech and Language Processing (2021):

“Language is the best natural tool for communication between humans, and NLP makes this language understandable to machines.”

This guide explores the theory, applications, challenges, and future trends of NLP.

Fundamentally, NLP combines computational methods with the principles of linguistics to analyze and interpret text. Its building blocks include:

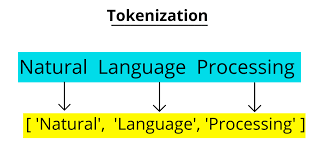

Tokenization and text preprocessing

Tokenization and text preprocessing are the main steps in preparing text for analysis. First, the text goes through preprocessing, where noise (such as irrelevant characters, punctuation, and stopwords) is removed and the data is given structure. Then the text is split into tokens such as words or phrases. Emily Bender, a computational linguist, notes in Linguistic Fundamentals for Natural Language Processing (2019) that preprocessing is important because it converts data from an unstructured state into a form that an algorithm can process effectively.
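The steps described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the function name `preprocess` and the tiny stopword list are assumptions made for the example, and real systems usually rely on much longer stopword lists and language-aware tokenizers.

```python
import re

# Tiny illustrative stopword list (an assumption; real lists are much longer).
STOPWORDS = {"a", "an", "the", "is", "are", "and", "or", "of", "to", "in", "it"}


def preprocess(text: str) -> list[str]:
    """Lowercase the text, drop punctuation, split into tokens, remove stopwords."""
    lowered = text.lower()
    # Keep runs of letters, digits, and apostrophes as tokens; everything
    # else (punctuation, symbols, extra whitespace) is treated as noise.
    tokens = re.findall(r"[a-z0-9']+", lowered)
    return [token for token in tokens if token not in STOPWORDS]


tokens = preprocess("The cat is sitting in the garden, and it looks happy!")
print(tokens)
```

Here the sentence is reduced to the words that carry most of its content: cat, sitting, garden, looks, happy.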

Syntax and Semantics

When computers process language, they need to understand two things: how sentences are built, which is the syntax of the sentence, and what they mean, which is the semantics.

Syntax

Syntax is about the structure of a sentence. It helps a machine figure out how words fit together to form something meaningful.

Semantics

Semantics is about the meaning of words and sentences. It helps machines understand what you are trying to say.

Machine Learning Models

Machine learning models play a crucial role in NLP, helping computers understand and generate human-like language. One example is Word2Vec, which turns words into numeric vectors so that words with similar meanings end up close together. This is useful in tasks like search and translation.
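To make the idea of "words as numbers" concrete, here is a small sketch that compares word vectors with cosine similarity. The three-dimensional vectors below are made up by hand for illustration; they are not real Word2Vec output, which is learned from large amounts of text and usually has hundreds of dimensions. The helper names `cosine_similarity` and `most_similar` are also just choices for this example.

```python
import math

# Hand-made toy vectors (an assumption for illustration, not trained embeddings).
toy_vectors = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word, vectors):
    """Return the other word whose vector points in the most similar direction."""
    candidates = (w for w in vectors if w != word)
    return max(candidates, key=lambda w: cosine_similarity(vectors[word], vectors[w]))


print(most_similar("king", toy_vectors))
```

With these toy numbers, "king" comes out closer to "queen" than to "apple", which is the kind of grouping by meaning that the paragraph above describes.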

Another model is BERT, which helps computers understand the meaning of a word by looking at the context around it, both before and after the word. GPT, by contrast, is great for tasks like writing stories, chatbots, or code generation: while BERT focuses on understanding text, GPT is designed for creating it. These models, along with variations like RoBERTa and DistilBERT, power many modern NLP applications by helping computers interact with language in a more human-like way.

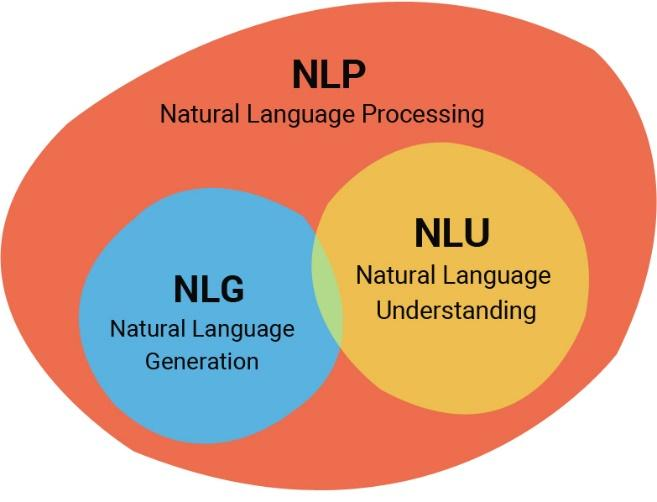

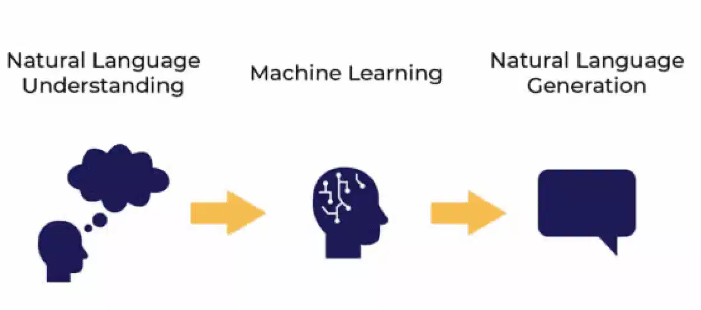

Natural Language Understanding (NLU) and Generation (NLG)

Natural Language Processing has two basic components: Natural Language Understanding (NLU) and Natural Language Generation (NLG). Most of the time they work together to build a machine capable of processing and producing human language.

Natural Language Understanding (NLU)

NLU is the comprehension side of NLP. It helps machines understand the meaning (semantics) and structure (syntax) of speech or text. Key tasks include:

Intent Recognition: Figuring out the goal of a sentence. For example, in the sentence “Book me a flight to Paris,” the intent is to book a flight.

Entity Recognition: Identifying important information like names, dates, or places. For instance, in “Daler, meet me at 3 PM,” “Daler” is a person, and “3 PM” is a time.

Sentiment Analysis: Determining the emotion or feeling in a sentence. For example, “I want to give this product to my mother because she loves it!” shows a positive emotion.

Text Classification: Categorizing text into different groups, like labeling spam emails as “Spam.”

Coreference Resolution: Matching pronouns with the correct nouns. For example, in “Oisha has a passion for her cat. She purchased it yesterday,” “She” refers to “Oisha” and “it” refers to “cat.”
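Two of the tasks above, intent recognition and entity recognition, can be sketched with simple hand-written rules. This is only a toy illustration: the intent names, the keyword lists, and the time pattern are all assumptions made for this example, and modern systems learn such patterns from data rather than from fixed rules.

```python
import re

# Hypothetical keyword rules: an intent matches when all its keywords appear.
INTENT_KEYWORDS = {
    "book_flight": ["book", "flight"],
    "check_weather": ["weather"],
}

# Matches simple clock times such as "3 PM" or "10:30 am".
TIME_PATTERN = re.compile(r"\b(\d{1,2}(?::\d{2})?\s?(?:AM|PM))\b", re.IGNORECASE)


def recognize_intent(sentence: str) -> str:
    """Return the first intent whose keywords all occur in the sentence."""
    words = set(re.findall(r"[a-z]+", sentence.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if all(keyword in words for keyword in keywords):
            return intent
    return "unknown"


def extract_times(sentence: str) -> list[str]:
    """Return every time expression the pattern finds in the sentence."""
    return TIME_PATTERN.findall(sentence)


print(recognize_intent("Book me a flight to Paris"))
print(extract_times("Daler, meet me at 3 PM"))
```

Rules like these break easily ("I need to fly to Paris" contains neither keyword), which is one reason NLU moved from rule-based systems to machine learning.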

Natural Language Generation (NLG)

NLG is the counterpart of NLU: it is concerned with producing coherent and meaningful language, often from data such as graphs or formal models. It allows machines to generate text that people can read and understand. Common tasks include:

Text Summarization: Making long documents short by compressing the most important ideas they contain.

Chatbots: Engaging in conversations and generating human-like output.

Content Creation: Automatically generating articles, reports, or other text from the provided data.

Translation: Reading a text in one language and reproducing the same ideas in another language.

In other words, NLU lets machines grasp what humans mean and intend, and NLG lets machines respond to humans in natural, human-like language. Together, these technologies lie at the foundation of intelligent systems such as voice and chat-based assistants as well as language models.
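As an illustration of the text summarization task listed above, the sketch below picks the sentences whose words occur most often in the document. This is a simple extractive approach chosen for the example, not how modern summarizers generate new sentences; the function name `summarize` and the stopword list are assumptions.

```python
import re
from collections import Counter

# Small illustrative stopword list (an assumption for this example).
STOPWORDS = {"a", "an", "the", "is", "are", "and", "or", "of", "to", "in"}


def content_words(text: str) -> list[str]:
    """Lowercase the text and return its words without stopwords."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(text: str, num_sentences: int = 1) -> str:
    """Keep the highest-scoring sentences, in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    frequencies = Counter(content_words(text))

    def score(sentence: str) -> int:
        # A sentence scores higher when it contains frequent words.
        return sum(frequencies[w] for w in content_words(sentence))

    top = sorted(sentences, key=score, reverse=True)[:num_sentences]
    return " ".join(s for s in sentences if s in top)


document = (
    "NLP helps machines understand language. "
    "Machines use NLP to translate language and answer questions. "
    "The weather is nice today."
)
print(summarize(document, num_sentences=1))
```

Because the score is a plain sum, longer sentences are favored; dividing by sentence length is a common adjustment if that matters for the text at hand.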

Applications of NLP

NLP’s impact spans industries, reshaping communication and decision-making processes:

Customer Service

Programs like Amazon’s Alexa and Google Assistant use Natural Language Processing (NLP) to communicate with users. These systems use dialogue management to understand and respond appropriately to user queries. In 2018, during the launch of Google Duplex, Google’s CEO Sundar Pichai emphasized the importance of dialogue management, describing it as a key system that enables these technologies to interact effectively and naturally with users.

Education

In education, tools like Grammarly use NLP to improve writing, while programs like Turnitin detect plagiarism. Computational linguist Karen Spärck Jones, a pioneer in information retrieval, said that technologies that can understand and evaluate written work help people access knowledge more easily, making written content more accessible to everyone.

Social Media and Sentiment Analysis

Since the rise of the internet and social media, there has been much debate about its impact. Organizations use NLP to analyze sentiments from social media platforms like Twitter or Facebook. This helps them identify trends and measure customer satisfaction.
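A very simple way to see how sentiment can be measured is a word-list (lexicon) approach: count positive and negative words and compare. The word lists and function names below are assumptions made for this sketch; real sentiment tools use far larger lexicons or trained models.

```python
import re

# Tiny hand-picked word lists (assumptions for illustration only).
POSITIVE = {"love", "loves", "great", "good", "happy", "excellent"}
NEGATIVE = {"hate", "hates", "bad", "terrible", "slow", "broken"}


def sentiment_score(text: str) -> int:
    """Return (number of positive words) minus (number of negative words)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    positives = sum(token in POSITIVE for token in tokens)
    negatives = sum(token in NEGATIVE for token in tokens)
    return positives - negatives


def sentiment_label(text: str) -> str:
    """Map the score to a coarse label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


posts = [
    "I want to give this product to my mother because she loves it!",
    "The app is slow and the login is broken.",
    "The package arrived on Tuesday.",
]
for post in posts:
    print(sentiment_label(post), "|", post)
```

Counting words like this ignores negation ("not good") and sarcasm, so it is best read as a starting point rather than a reliable measure of customer satisfaction.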

Challenges in NLP

Despite its rapid evolution, NLP faces significant challenges that limit its effectiveness:

Language Ambiguity

Human language is often unclear and depends on context. For example, the word “bank” can mean a financial institution, the side of a river, or part of a machine. Machines find it difficult to understand these different meanings, especially when it comes to metaphors, jokes, or sarcasm.
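One classic way to tackle the “bank” problem is to compare the words around the ambiguous word with a short description of each possible meaning and pick the meaning with the most words in common. The sketch below is a simplified version of that idea (often called the Lesk approach); the sense descriptions, the stopword list, and the function names are assumptions written for this example rather than entries from a real dictionary.

```python
import re

# Hypothetical mini dictionary of senses for the word "bank".
BANK_SENSES = {
    "financial institution": "an institution where people deposit money and take out loans",
    "river side": "the sloping land along the edge of a river or stream of water",
    "part of a machine": "a row of similar parts such as switches or keys in a machine",
}

STOPWORDS = {"a", "an", "the", "of", "or", "and", "to", "in", "on", "at",
             "i", "we", "where", "out", "along", "as", "such"}


def content_words(text: str) -> set[str]:
    """Return the set of lowercase words in the text, without stopwords."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def disambiguate(sentence: str, senses: dict[str, str]) -> str:
    """Pick the sense whose description shares the most words with the sentence."""
    context = content_words(sentence)
    return max(senses, key=lambda sense: len(context & content_words(senses[sense])))


print(disambiguate("I went to the bank to deposit money", BANK_SENSES))
print(disambiguate("We sat on the bank of the river and watched the water", BANK_SENSES))
```

When no words overlap at all, this sketch simply returns the first sense in the dictionary, which shows why sentences with little context, jokes, or sarcasm remain hard for machines.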

Bias in Data

It is well known that models trained on biased datasets produce biased results. In Anatomy of an AI System (2018), Crawford and Paglen write: “The bias embedded in the logic of the software algorithms corresponds to the bias of the data which informs them.”

Imagine you’re making a robot that recognizes faces. If you only teach it using pictures of people with light skin, the robot might not recognize people with darker skin. Why? Because it didn’t learn about them from the examples! That’s bias in data: the robot learns from what you show it, and if the data is unfair, the robot becomes unfair too.

Multilinguality

NLP systems often work better in English than in other languages because of differences in grammar, sentence structure, and culture. There is also less quality data available for many non-English languages. Models like mBERT (a multilingual model) and techniques like transfer learning help address these issues, but building a truly universal NLP system is still a big challenge.

Ethical Concerns

There are important ethical issues in NLP, such as privacy, fairness, and transparency. For example, AI systems like chatbots can access personal conversations, raising privacy concerns. These systems might store or misuse personal information. To avoid harm, it’s necessary to set clear ethical guidelines and make sure AI systems are transparent, fair, and free from bias.

The Future of NLP

The future of Natural Language Processing (NLP) looks very exciting. Researchers are working on new ways to make NLP systems understand not just grammar and word meanings, but also the context behind the words. This will help machines understand how the meaning of words can change depending on the situation. For example, better online translation tools will help people communicate across different languages, making global connections easier.

At the same time, there’s a growing focus on making sure NLP systems are ethical. Timnit Gebru, an expert in AI ethics, says it’s important for these systems to be fair, transparent, and unbiased (Proceedings of the Conference on Fairness, Accountability, and Transparency, 2020). There’s also excitement about new fields like multimodal learning, which combines language, images, and other types of data. This will help NLP systems handle even more complex tasks. NLP is also becoming more helpful for people with disabilities, like providing real-time captions for the deaf or creating summaries for people with visual impairments.

NLP is already changing how people interact with machines, from improving customer service to helping with medical breakthroughs. If challenges like bias and ambiguity are solved, researchers will be able to create even smarter systems that can understand people better. In the future, NLP might help machines understand human emotions, culture, and context, bringing people and machines closer together.

Careers of the Future for Teens and Young Adults

As technology continues to advance, especially with AI and NLP, demand for certain careers will increase while others may become less common. Careers likely to grow include:

AI and ML Engineers: With the rise of AI and NLP, there will be a high demand for people who can design, develop, and improve these systems.

Data Scientists and Analysts: NLP systems rely on large amounts of data, and data scientists will be needed to analyze and interpret this information. This is a great field for those who enjoy working with numbers and technology.

Cloud Computing Experts: As NLP systems depend more on cloud services, there will be a need for people to manage and maintain these systems in the cloud.

Multimodal Technology Specialists: As NLP systems start combining language, images, and other data, there will be more jobs for people who can work with these complex systems.

Here are some careers that might be impacted by AI and NLP automation:

Customer Support Representatives: Chatbots and AI can answer customer queries, reducing the need for human agents.

Data Entry Clerks: AI can automatically process and enter data faster than humans.

Journalists/Reporters: AI can write news articles based on data, replacing basic reporting jobs.

Telemarketers: AI can make calls and handle customer interactions without human help.

Financial Analysts: AI can analyze financial data and make predictions faster than humans.

Works Cited

Bender, E. (2019). Linguistic fundamentals for natural language processing. Morgan & Claypool. https://www.morganclaypool.com/doi/10.2200/S00499ED1V01Y201602HLT032

Crawford, K., & Paglen, T. (2018). Anatomy of an AI system. AI Now Institute. https://ainowinstitute.org/aiexperience.html

Gebru, T. (2020). Algorithmic accountability in AI. Proceedings of the Conference on Fairness, Accountability, and Transparency. https://dl.acm.org/doi/10.1145/3287560.3287590

Jurafsky, D., & Martin, J. H. (2021). Speech and language processing. Pearson. https://web.stanford.edu/~jurafsky/slp3/

Pichai, S. (2018). Google Duplex: AI that can make phone calls. Google Blog. https://www.blog.google/products/assistant/introducing-google-duplex-ai-system-telephone-conversations/